While the code in sympy.physics.quantum is currently very good at representations in discrete Hilbert spaces, representing expressions in continuous Hilbert spaces requires alot of legwork. The work I’ve done so far does a good job on representing simple expressions, but what about some long arbitrary expression?

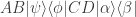

Brian gave a good example. Let’s say we want to represent the expression

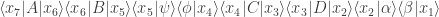

in the position basis. The easiest way to do this is just to insert unities in the appropriate places. So, we try to evaluate

where we then integrate over  and

and

Let’s now consider a simpler case, where it’s also easier to see directly the expected result. How might we go about representing the expression

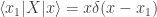

where  is an eigenket of the position operator

is an eigenket of the position operator  . The easiest way to do this is to just bring in a position bra from the left, to compute

. The easiest way to do this is to just bring in a position bra from the left, to compute

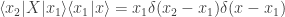

Let’s make sure this is consistent with what we get if we instead insert a unity like we would with any arbitrary expression:

If we then integrate over

Since  and

and  are just dummy variables, this is indeed consistent.

are just dummy variables, this is indeed consistent.

How do we then implement this in represent.py? Well, first, its useful to change the get_basis_kets in HermitianOperator a bit. Instead of just taking a number of kets, it now takes a number of kets AND a start index. That way, if we recursively keep track of the index in the options of represent, when rep_innerproduct or rep_expectation are called they know which basis kets they should be using for their calculations.

If we are keeping track of an index for kets, let’s think about when we should increment this index. There are a few key cases to consider (reading the expression from right to left):

- Ket followed by an operator

: Here, the ket and the operator should be using the same index for their kets. The representation of

: Here, the ket and the operator should be using the same index for their kets. The representation of  is

is  and representation of

and representation of  will start with the same ket (

will start with the same ket ( ). In this case, we don’t increment our index in between processing the ket and the operator.

). In this case, we don’t increment our index in between processing the ket and the operator.

- Bra followed by a ket

: In this case, the two representations should use different kets (there’s nowhere to insert a unity), so we should increment our index before processing the ket, thus calculating

: In this case, the two representations should use different kets (there’s nowhere to insert a unity), so we should increment our index before processing the ket, thus calculating

- Operator followed by a bra

: This is a case where we are inserting a unity, but we still need to increment the index we’re keeping track of. That’s because the representation of

: This is a case where we are inserting a unity, but we still need to increment the index we’re keeping track of. That’s because the representation of  starts with an

starts with an  ket on the right side, so the index when we take this representation is just 1. But, for representing the bra, we take $\langle x|x_2\rangle$, so we increment the index. Thus, we can take the representation as

ket on the right side, so the index when we take this representation is just 1. But, for representing the bra, we take $\langle x|x_2\rangle$, so we increment the index. Thus, we can take the representation as  .

.

- Operator followed by an operator

: We again have to increment the index even though we’re inserting a unity. The start index of the expectation value for the first operator will be one less than the second, so we end up calculating

: We again have to increment the index even though we’re inserting a unity. The start index of the expectation value for the first operator will be one less than the second, so we end up calculating  .

.

Now that we know how to insert unities, we also need to keep track of which kets we inserted so that we can integrate over the appropriate coordinates. In the case of the position basis, there will be many delta functions that come out of that, but most of them collapse out when you actually do the integral. I first tried using integrate() on the resulting expression directly, but found a bug where the delta functions were incorrectly collapsed. An example:

>>> x, x_1, x_2 = symbols(‘x,x_1,x_2’)

>>> f = x_2 * DiracDelta(x-x_2) * DiracDelta(x_2-x_1)

>>> integrate(f, (x_2, -oo, oo))

x_1

We expect, instead, either x*DiracDelta(x-x_1) or x_1*DiracDelta(x-x_1). As a first approach to this, and collapsing dirac deltas in general, I wrote a collapse_deltas function, which, given a list of unities, would collapse the delta functions. I later discovered that a function in the integrals module, deltaintegrate(), already accomplished much of this, so I instead created a new branch and pushed a bug fix for the bug I show above.

Phew. That was alot. The code for the updated represent logic can be seen in my represent branch here. The pull request for my DiracDelta bug fix is here.

Filed under: Uncategorized | 1 Comment »